AI-native means something specific. These applications are built on AI as their foundation from the start, unlike traditional software that adds AI features later. They enable intelligent automation, real-time analytics, and personalized experiences. The market shows strong interest in this approach: funding for GenAI-native applications reached $8.5 billion through October 2024, and at least 47 AI-native companies now generate more than $25 million in annual recurring revenue.

Success stories now extend beyond code assistance and customer support into healthcare, finance, and other specialized fields. Healthcare stands out: AI-native solutions are improving both efficiency and accuracy in medical image analysis. Successful organizations focus on reimagining processes rather than simply automating them, creating new operational models that deliver exceptional value.

This piece explains how AI-native infrastructure differs from traditional approaches, walks through real-world examples of companies building on AI-native principles, and offers a framework for applying AI effectively in your enterprise.

Core Principles of AI-Native Architecture

AI-native systems need a completely different architectural approach compared to traditional software design. Intelligence sits at the heart of these systems rather than being added later.

- Data-centric and model-driven design

Quality data is the foundation of successful AI-native systems. Data-centric AI treats datasets as dynamic assets that need continuous development, not as static inputs. Instead of repeatedly tweaking models, this approach systematically improves the data itself, and it often delivers better results as models become more standardized.
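To make "improving data systematically" concrete, here is a minimal sketch of one data-centric pass over a labeled text dataset. The function name `audit_dataset` and the `(text, label)` format are illustrative assumptions, not part of any specific tool. It normalizes text, drops empty inputs and duplicates, and separates out examples that carry conflicting labels so a human can fix them before any model is retrained.

```python
from collections import defaultdict

def audit_dataset(examples):
    """Hypothetical data-quality pass over (text, label) pairs.

    Returns (clean, conflicts):
    - whitespace and case are normalized so near-identical texts collapse,
    - empty texts and exact duplicates are dropped,
    - texts that appear with more than one label are set aside as label noise.
    """
    labels_by_text = defaultdict(set)
    for text, label in examples:
        key = " ".join(text.lower().split())
        if key:  # skip empty or whitespace-only inputs
            labels_by_text[key].add(label)

    clean, conflicts = [], []
    for key, labels in labels_by_text.items():
        if len(labels) == 1:
            clean.append((key, next(iter(labels))))
        else:
            conflicts.append((key, sorted(labels)))
    return clean, conflicts

data = [
    ("Refund my order", "billing"),
    ("refund my  order", "billing"),     # duplicate after normalization
    ("Where is my parcel?", "shipping"),
    ("Where is my parcel?", "billing"),  # conflicting label
    ("   ", "other"),                    # empty input
]
clean, conflicts = audit_dataset(data)
print(clean)      # [('refund my order', 'billing')]
print(conflicts)  # [('where is my parcel?', ['billing', 'shipping'])]
```

The conflict list is the data-centric payoff: fixing those labels often helps more than another round of hyperparameter tuning.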

Model-driven architecture provides vital abstraction layers that reduce development complexity. Developers create conceptual models of real-world objects and software components, which lets them represent everything, from databases to natural language processing engines, as models. As a result, the number of elements they need to understand drops dramatically, from 10^13 to just 10^3.

- Continuous learning and feedback loops

AI-native systems must adapt to changing environments without explicit reprogramming. Through continuous learning, models process new data incrementally and stay relevant in dynamic situations. This requires careful implementation, however: new learning can overwrite previously acquired knowledge, a problem known as catastrophic forgetting.
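One widely used mitigation for catastrophic forgetting is rehearsal (experience replay): each incremental update mixes fresh data with a sample of older examples. The sketch below assumes a fixed-size buffer filled by reservoir sampling; `ReplayBuffer`, `build_update_batch`, and the 0.5 replay ratio are illustrative choices, and the actual model update step is left out.

```python
import random

class ReplayBuffer:
    """Fixed-size memory of past examples, filled by reservoir sampling so that
    every example seen so far has an equal chance of being retained."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, example):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(example)
        else:
            # Replace a stored item with probability capacity / seen.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = example

    def sample(self, k):
        return self.rng.sample(self.items, min(k, len(self.items)))


def build_update_batch(new_examples, buffer, replay_ratio=0.5):
    """Mix fresh data with replayed old data before an incremental update."""
    n_replay = int(len(new_examples) * replay_ratio)
    batch = list(new_examples) + buffer.sample(n_replay)  # sample before adding new data
    for ex in new_examples:
        buffer.add(ex)  # remember the new data for future updates
    return batch


buffer = ReplayBuffer(capacity=100)
for i in range(500):
    buffer.add(("old", i))
batch = build_update_batch([("new", i) for i in range(10)], buffer)
print(len(batch))  # 15: 10 new examples plus 5 replayed old ones
```

Training on `batch` rather than only the new examples keeps older patterns in the gradient signal, which is what slows forgetting.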

Good AI-native architectures include feedback loops that help systems improve their operations based on results. These loops let AI review outcomes, adjust its approach, and boost decision quality over time.
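A feedback loop can be as small as a decision rule that reviews each outcome and adjusts itself. The sketch below is a hypothetical example (the class name, starting threshold, and step size are all assumptions): a score threshold becomes stricter after a false positive and more permissive after a false negative, while correct decisions leave it unchanged.

```python
class FeedbackTunedThreshold:
    """Hypothetical decision rule whose threshold is nudged by observed outcomes."""

    def __init__(self, threshold=0.5, step=0.05):
        self.threshold = threshold
        self.step = step

    def decide(self, score):
        return score >= self.threshold

    def record_outcome(self, score, was_positive):
        decided = self.decide(score)
        if decided and not was_positive:
            # False positive: become more conservative.
            self.threshold = min(1.0, self.threshold + self.step)
        elif not decided and was_positive:
            # False negative: become more permissive.
            self.threshold = max(0.0, self.threshold - self.step)
        # Correct decisions leave the threshold as it is.


rule = FeedbackTunedThreshold(threshold=0.5, step=0.05)
rule.record_outcome(score=0.6, was_positive=False)  # flagged, but it was fine
rule.record_outcome(score=0.8, was_positive=True)   # correct decision: no change
print(round(rule.threshold, 2))  # 0.55
```

Production systems usually feed outcomes back into retraining rather than a single parameter, but the loop structure (decide, observe, adjust) is the same.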

- Distributed intelligence and orchestration

Distributed intelligence is central to AI-native design. It spreads AI workloads across devices, edge nodes, and the central cloud, using distributed computing resources to handle massive datasets while staying responsive.

AI orchestration coordinates these distributed parts. It manages model deployment, implementation, and maintenance of integrations. Automated workflows, progress tracking, resource management, and failure handling create unified systems that work better and scale easily.
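The orchestration duties listed above (automated workflows, progress tracking, failure handling) can be sketched in a few lines. This is a simplified, hypothetical runner, not the API of any orchestration product: it executes named steps in order, retries failures with exponential backoff, records each step's status, and halts so later steps never receive bad input.

```python
import time

def run_pipeline(steps, max_retries=2, backoff_s=0.0):
    """Run (name, callable) steps in order; each callable receives the previous
    step's output. Returns (final_output_or_None, status_by_step)."""
    status = {}
    data = None
    for name, fn in steps:
        for attempt in range(max_retries + 1):
            try:
                data = fn(data)
            except Exception as exc:
                status[name] = f"failed: {exc}"
                if attempt < max_retries:
                    time.sleep(backoff_s * 2 ** attempt)  # exponential backoff
            else:
                status[name] = f"ok (attempt {attempt + 1})"
                break
        else:
            # Every attempt failed: stop the workflow here.
            return None, status
    return data, status


deploy_calls = {"count": 0}

def load_model(_):
    return "model-v2"  # placeholder for pulling a model from a registry

def deploy(model):
    deploy_calls["count"] += 1
    if deploy_calls["count"] == 1:
        raise RuntimeError("node unavailable")  # simulated transient failure
    return f"{model} deployed"

result, status = run_pipeline([("load", load_model), ("deploy", deploy)])
print(result)  # model-v2 deployed
print(status)  # {'load': 'ok (attempt 1)', 'deploy': 'ok (attempt 2)'}
```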

- Zero-touch provisioning and real-time inference

Zero-touch provisioning configures devices automatically, without manual work. This speeds up deployment and reduces human error. AI improves the process further through predictive analytics, spotting potential issues early and optimizing workflows based on historical data.
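A minimal sketch of the zero-touch flow, under stated assumptions: devices are pre-registered by serial number, configuration comes from a per-model template, and a historical failure rate per device model stands in for the predictive-analytics step. `TEMPLATES`, `HISTORICAL_FAILURE_RATE`, and `provision` are hypothetical names with made-up values, not a real provisioning service.

```python
from dataclasses import dataclass

@dataclass
class Device:
    serial: str
    model: str
    site: str

# Hypothetical per-model configuration templates.
TEMPLATES = {
    "edge-gateway": {"firmware": "2.4.1", "telemetry_interval_s": 30},
    "camera": {"firmware": "1.9.0", "telemetry_interval_s": 60},
}
# Hypothetical history: fraction of past rollouts of each model that needed rework.
HISTORICAL_FAILURE_RATE = {"edge-gateway": 0.02, "camera": 0.15}

def provision(device, registered_serials, risk_threshold=0.1):
    """Build a device's configuration with no human in the loop."""
    if device.serial not in registered_serials:
        raise PermissionError(f"unknown device {device.serial}")
    config = dict(TEMPLATES[device.model],
                  site=device.site,
                  hostname=f"{device.site}-{device.serial}")
    # Stand-in for predictive analytics: flag model types with a history of problems.
    config["needs_review"] = HISTORICAL_FAILURE_RATE.get(device.model, 0.0) > risk_threshold
    return config


cfg = provision(Device("A1", "camera", "plant3"), registered_serials={"A1"})
print(cfg["hostname"], cfg["needs_review"])  # plant3-A1 True

try:
    provision(Device("Z9", "camera", "plant3"), registered_serials={"A1"})
    rejected = False
except PermissionError:
    rejected = True  # unregistered hardware is refused automatically
```

A real system would replace the static failure-rate table with a trained model, but the point is the same: problems are anticipated before rollout instead of being discovered on site.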

Real-time inference is the action layer of AI-native systems: it applies trained models to new data to generate immediate predictions. This happens either through remote inference (API calls to model servers) or embedded inference (models built directly into applications). Each method strikes a different balance between speed and centralized control.
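The two inference styles can sit behind one interface so the application does not care where the model runs. In this sketch the embedded predictor is a toy linear model, and the remote predictor POSTs JSON to a model server; the endpoint URL and the `{"features": ...}` / `{"score": ...}` request and response shapes are assumptions, not any particular server's API.

```python
import json
import urllib.request
from typing import Protocol, Sequence

class Predictor(Protocol):
    def predict(self, features: Sequence[float]) -> float: ...

class EmbeddedPredictor:
    """Weights ship inside the application: no network hop, but every client
    must be updated to roll out a new model."""

    def __init__(self, weights, bias):
        self.weights, self.bias = weights, bias

    def predict(self, features):
        return sum(w * x for w, x in zip(self.weights, features)) + self.bias

class RemotePredictor:
    """Model lives behind a model server: one place to update and monitor it,
    at the cost of a network round trip per request."""

    def __init__(self, url, timeout_s=0.5):
        self.url, self.timeout_s = url, timeout_s

    def build_request(self, features):
        body = json.dumps({"features": list(features)}).encode()
        return urllib.request.Request(self.url, data=body, method="POST",
                                      headers={"Content-Type": "application/json"})

    def predict(self, features):
        with urllib.request.urlopen(self.build_request(features), timeout=self.timeout_s) as resp:
            return float(json.load(resp)["score"])  # assumed response schema


local = EmbeddedPredictor(weights=[0.5, 2.0], bias=1.0)
print(local.predict([2.0, 1.0]))  # 4.0

remote = RemotePredictor("http://localhost:8080/predict")  # hypothetical endpoint
request = remote.build_request([2.0, 1.0])  # inspect the request without sending it
```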

From Cloud-Native to AI-Native: The Evolution

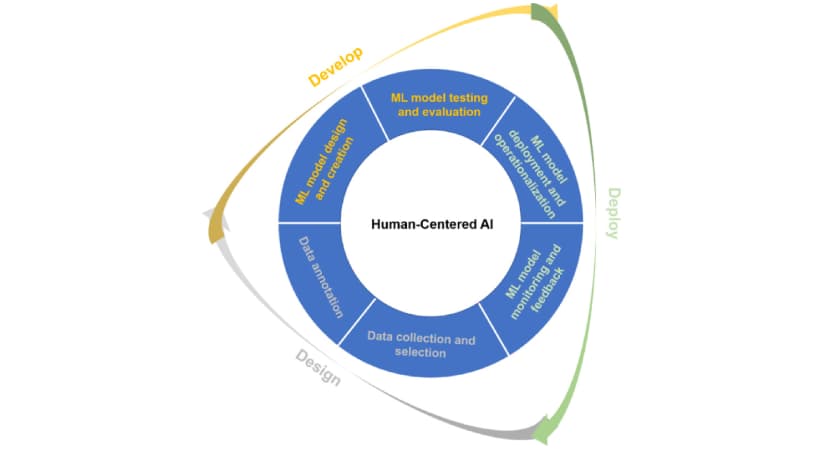

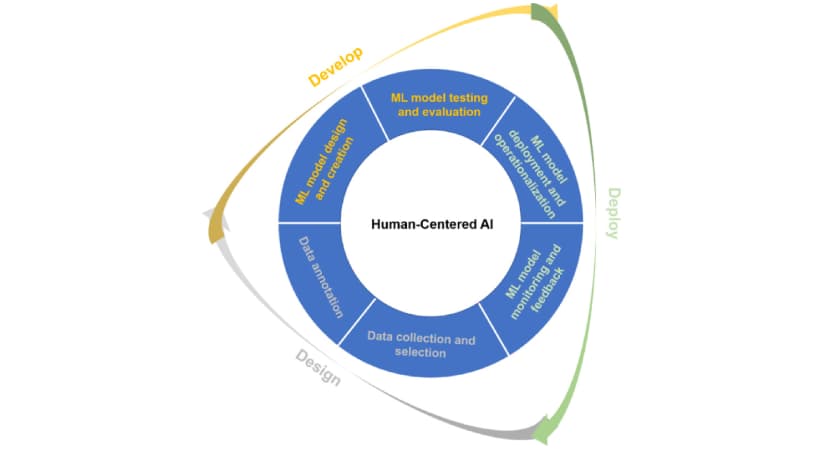


The rise of AI-native applications relies heavily on cloud-native technologies. This progress shows how better infrastructure creates opportunities for smarter systems.

- How cloud-native infrastructure enables AI-native systems

Cloud-native infrastructure serves as the foundation for AI-native systems. Industry experts believe an organization must be cloud-native before it can become truly AI-native. Companies typically need several core components to start this journey: microservices architecture, containerized deployment, continuous integration/continuous delivery (CI/CD) pipelines, and comprehensive observability.

These basic elements provide the scalability and flexibility that advanced AI workloads need. The Cloud Native Computing Foundation (CNCF) includes this connection in its definition of Cloud Native Artificial Intelligence as "approaches and patterns for building and deploying AI applications using cloud-native principles".

- Microservices, containers, and AI model orchestration

Microservices architecture has changed how AI systems work by breaking large applications into smaller, independent parts. This approach makes systems much more resilient because failures stay isolated to single services. It also lets AI agents autonomously manage service interactions, balance workloads, and predict system failures.
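Failure isolation between services is usually enforced with patterns such as the circuit breaker: after repeated errors from a downstream service, callers stop calling it for a while and serve a fallback instead, so one failing service does not drag down the rest. The sketch below is a generic implementation of that pattern; the service names, thresholds, and injectable `clock` are illustrative assumptions.

```python
import time

class CircuitBreaker:
    """After `max_failures` consecutive errors, stop calling the service for
    `reset_after_s` seconds and return `fallback` instead."""

    def __init__(self, max_failures=3, reset_after_s=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, fallback=None):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                return fallback  # open: fail fast without touching the service
            self.opened_at = None  # half-open: allow one trial call
        try:
            result = fn(*args)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback
        self.failures = 0  # a success closes the circuit fully
        return result


clock_now = [0.0]  # fake clock so the example is deterministic
breaker = CircuitBreaker(max_failures=2, reset_after_s=10.0, clock=lambda: clock_now[0])

def recommendation_service():
    raise ConnectionError("recommendations down")

healthy_calls = []
def healthy_service():
    healthy_calls.append(1)
    return ["personalized"]

for _ in range(2):
    breaker.call(recommendation_service, fallback=["bestsellers"])  # opens the circuit

while_open = breaker.call(healthy_service, fallback=["bestsellers"])
clock_now[0] = 11.0  # simulate the reset window elapsing
after_reset = breaker.call(healthy_service, fallback=["bestsellers"])
print(while_open, after_reset)  # ['bestsellers'] ['personalized']
```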

Kubernetes has become essential for AI-native development. It is now the fastest-growing project in open-source software history after Linux. The Kubernetes market was valued at $1.46 billion in 2019 and is projected to grow 23.4% annually through 2031. Companies such as OpenAI, Spotify, and Uber use Kubernetes because it handles complex computational needs well.
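As an illustration of how a model server is typically handed to Kubernetes, the sketch below builds a standard `apps/v1` Deployment manifest as a Python dict and prints it as JSON (`kubectl apply -f` accepts JSON as well as YAML). The image name, port, and replica count are placeholders, and requesting `nvidia.com/gpu` assumes the cluster runs the NVIDIA device plugin.

```python
import json

def model_server_deployment(name, image, replicas=2, gpus_per_pod=1, port=8080):
    """Return a Kubernetes Deployment manifest for a containerized model server."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},  # must match the pod template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # GPU scheduling assumes the NVIDIA device plugin is installed.
                        "resources": {"limits": {"nvidia.com/gpu": gpus_per_pod}},
                    }],
                },
            },
        },
    }


manifest = model_server_deployment("sentiment-model", "registry.example.com/sentiment:1.0")
print(json.dumps(manifest, indent=2))  # save as deploy.json, then: kubectl apply -f deploy.json
```

Kubernetes then keeps the requested number of replicas running and reschedules them onto healthy GPU nodes if one fails.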

- Serverless and edge computing for AI-native workloads

Serverless computing addresses major AI scaling challenges by providing resources on demand. The serverless market was worth $8.01 billion in 2022 and is projected to reach $50.86 billion by 2031. Serverless functions can run many instances concurrently, which suits parallel AI workloads well.
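The parallelism argument can be sketched locally. Here `handler(event, context)` follows the shape of an AWS Lambda Python handler, and a thread pool stands in for the platform launching concurrent function instances; the word-count "model" and the chunking scheme are placeholders, not a real deployment.

```python
from concurrent.futures import ThreadPoolExecutor

def handler(event, context=None):
    """Lambda-style handler: one invocation scores one chunk of documents,
    independently of every other invocation."""
    scores = [len(doc.split()) for doc in event["documents"]]  # placeholder "model"
    return {"chunk_start": event["chunk_start"], "scores": scores}

def fan_out(documents, chunk_size=2, max_concurrency=4):
    """Split the workload into events and invoke the handler concurrently.
    Locally, a thread pool plays the role of parallel function instances."""
    events = [{"chunk_start": i, "documents": documents[i:i + chunk_size]}
              for i in range(0, len(documents), chunk_size)]
    with ThreadPoolExecutor(max_workers=max_concurrency) as pool:
        results = list(pool.map(handler, events))  # map preserves input order
    return [score for result in results for score in result["scores"]]


print(fan_out(["a b", "c", "d e f", "g", "h i"]))  # [2, 1, 3, 1, 2]
```

Because each invocation is stateless, the platform can scale the number of instances with the number of events instead of provisioning servers ahead of time.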

Edge AI processes data locally on devices. It offers three main benefits: faster real-time decisions, better data privacy, and lower bandwidth use. You can see it in action in self-driving cars, smart homes, healthcare monitoring, and industrial automation, all applications that show how AI-native systems keep expanding.
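The bandwidth and privacy benefits come from deciding on the device what is worth sending. A minimal sketch, assuming a sensor stream and a simple rolling z-score check as the on-device "model" (the function name, window size, and threshold are illustrative): only anomalous readings leave the device, and everything else stays local.

```python
from statistics import mean, stdev

def filter_on_device(readings, window=5, z_threshold=3.0):
    """Return (index, value) pairs worth uploading; all other readings stay local."""
    to_upload = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(readings[i] - mu) / sigma > z_threshold:
            to_upload.append((i, readings[i]))
    return to_upload


temperatures = [20.0, 20.5, 19.8, 20.2, 20.1, 20.3, 35.0, 20.0]
print(filter_on_device(temperatures))  # [(6, 35.0)]: one of eight readings is sent
```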

Real-World Examples of AI-Native Applications

Leading companies have built AI-native architectures that go beyond traditional automation. The examples below show how these organizations reshape their industries through AI-centric design.

- Upstart: AI-native lending and credit scoring

Upstart's AI platform has reshaped lending decisions by looking beyond standard credit scores. Its AI-native approach approves 44.28% more borrowers at 36% lower APRs than traditional models. The effect on underrepresented groups stands out: it approves 35% more Black borrowers and 46% more Hispanic borrowers than traditional models. In 2024, about 90% of Upstart-powered loans were approved instantly, with no documentation required. This shows how AI-native design can make lending both more streamlined and more inclusive.

- Voicenotes: AI-native productivity and summarization

Voicenotes shows AI-native design at its best in productivity tools. Built by the BuyMeACoffee.com team, the application turns voice notes into text using advanced speech recognition. What makes Voicenotes truly AI-native is what happens next: it analyzes the content, creates summaries, extracts action items, and suggests follow-up tasks from meetings. The platform also learns from how people use it and adapts to their specific needs, creating a more tailored experience.

- Tempus AI: AI-native healthcare and precision medicine

Tempus leads AI-native healthcare with a precision medicine platform connected to more than 50% of practicing oncologists in the US. The company's xT platform combines molecular profiling with clinical data to identify targeted therapies and clinical trials. The company also launched "olivia," an AI health concierge app that brings together patient data from over 1,000 health systems and creates AI-generated summaries of health information. Tempus shows how AI-native design can build new healthcare models from scratch.

- Glean: AI-native enterprise search and knowledge graphs

Glean's enterprise search uses a detailed knowledge graph to understand the relationships among content, people, and activity across an organization. Through more than 100 connectors, Glean builds a unique knowledge graph for each client that weighs the connections between pieces of information alongside many other signals. This AI-native approach helps employees save up to 10 hours yearly and cuts internal support requests by 20%. Companies usually earn back their Glean investment within six months, which shows the clear ROI of well-designed AI-native applications.
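To show why a graph of content, people, and activity helps ranking, here is a toy sketch. It is not Glean's algorithm: the edge types, weights, and two-hop scoring rule are all hypothetical. A document scores higher for a user when the user touched it directly or when a close collaborator authored, edited, or viewed it.

```python
from collections import defaultdict

# Hypothetical weights: how strongly each relationship signals relevance.
EDGE_WEIGHTS = {"authored": 3.0, "edited": 2.0, "viewed": 1.0, "works_with": 1.0}

def build_graph(edges):
    """edges: (source, relation, target) triples -> adjacency list."""
    graph = defaultdict(list)
    for src, relation, dst in edges:
        graph[src].append((relation, dst))
    return graph

def rank_documents(graph, user, candidates):
    """Score candidates via direct user->doc edges and user->colleague->doc paths."""
    scores = defaultdict(float)
    for relation, node in graph[user]:
        if node in candidates:
            scores[node] += EDGE_WEIGHTS[relation]
        elif relation == "works_with":
            for rel2, doc in graph[node]:
                if doc in candidates:
                    scores[doc] += EDGE_WEIGHTS[relation] * EDGE_WEIGHTS[rel2]
    return sorted(candidates, key=lambda d: scores[d], reverse=True)


graph = build_graph([
    ("ana", "works_with", "ben"),
    ("ben", "authored", "design.md"),
    ("ana", "viewed", "faq.md"),
    ("carl", "authored", "roadmap.md"),
])
print(rank_documents(graph, "ana", ["roadmap.md", "faq.md", "design.md"]))
# ['design.md', 'faq.md', 'roadmap.md']
```

A keyword index alone would treat all three documents equally for an empty query; the graph lets a teammate's design doc rise to the top.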

A Framework for Applying AI in the Enterprise

Companies that succeed with AI-native approaches follow specific patterns that are different from traditional digital transformation. By looking at these organizations, we can create a practical framework that works.

- Start with AI capabilities, not human limitations

When I think about AI implementation, possibilities matter more than automating existing processes. Many organizations make the mistake of applying AI to processes built around human constraints. This limits what AI can achieve. The focus should be on AI's unique abilities—like processing billions of data points at once or finding patterns that humans can't see. McKinsey research shows successful organizations use reinforcement learning and transfer learning to surpass the natural and social limits of human-based systems.

- Map customer journeys to AI-native workflows

Digital transformation has broken consumer journeys into unpredictable, nonlinear patterns, and mapping these complex paths requires AI-native implementations. BCG's research shows that AI-powered influence maps can reveal how brands affect decisions. These maps help teams build strategies for distinct consumer journeys, so marketers can prioritize investments across different behavior types while AI directs spending to high-impact touchpoints at key moments.

- Measure success beyond cost reduction

AI projects typically report technical metrics such as precision, recall, and lift, but these compare performance against a baseline rather than measuring business impact. Business metrics like revenue, profit, savings, and new customers should come first. Organizations that make this shift assess their AI systems not only by financial returns but also by how they improve agility, decision-making, and competitive strength.
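The gap between model metrics and business value is easy to see side by side. This sketch computes precision, recall, and lift (precision divided by the base rate) for a hypothetical churn model, then translates the same confusion counts into net value. The campaign economics (every flagged customer receives an offer costing `cost_per_contact`, and every correctly flagged churner is retained and worth `value_per_tp`) are simplifying assumptions.

```python
def classification_report(y_true, y_pred):
    """Precision, recall, and lift from binary labels (1 = churner)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    base_rate = sum(y_true) / len(y_true)
    lift = precision / base_rate if base_rate else 0.0
    return {"tp": tp, "fp": fp, "fn": fn,
            "precision": precision, "recall": recall, "lift": lift}

def net_business_value(report, value_per_tp, cost_per_contact):
    """Hypothetical retention campaign: revenue kept minus cost of all offers."""
    contacted = report["tp"] + report["fp"]
    return report["tp"] * value_per_tp - contacted * cost_per_contact


y_true = [1, 1, 0, 0, 0, 0, 1, 0, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 0, 0, 0]
report = classification_report(y_true, y_pred)
print(round(report["precision"], 2), round(report["recall"], 2), round(report["lift"], 2))  # 0.67 0.67 2.22
print(net_business_value(report, value_per_tp=500, cost_per_contact=20))  # 940
```

The same lift of 2.22 could be profitable or loss-making depending on offer cost and customer value, which is why the business number belongs in the report.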

- Build feedback loops into every layer

AI-native architectures need systems that learn continuously. Feedback loops—algorithms that spot errors in output and use corrections as input—help systems become more accurate over time. Teams should create two-way influence between quick-moving parts (applications) and slow-moving elements (culture, governance). This balanced approach recognizes that change happens at different speeds while keeping all parts working together.

Conclusion

Our deep dive into AI-native applications shows how organizations successfully move beyond basic automation to reimagine their entire processes. Companies that achieve meaningful transformation know that simply adding AI to existing systems produces limited results. True success comes when intelligence forms the foundation of system design rather than being an afterthought.

AI-native architecture rests on core principles: data-centric design, continuous learning, distributed intelligence, and real-time inference. These principles build on cloud-native technologies and represent a natural progression in both computational capability and design philosophy.

Examples like Upstart, Voicenotes, Tempus AI, and Glean demonstrate how AI-native thinking can transform businesses. These companies didn't just add AI features to existing products; they reimagined their approach with AI at the core, creating value propositions that traditional methods couldn't achieve.

A practical roadmap emerges from this framework: start with AI capabilities rather than human limitations, map customer journeys to AI workflows, measure success beyond cost reduction, and build feedback loops into every layer. Companies that do this position themselves to create applications that deliver unprecedented value.

The difference between AI-enhanced and truly AI-native applications grows more significant daily. Many companies claim to integrate AI, but those who reimagine their processes around AI capabilities will lead the next generation. The future belongs to innovators who rethink what's possible when intelligence becomes native to application design.